Assessing Decisional Capacity for Clinical Research or Treatment: A Review of Instruments

Abstract

Objective: The need to evaluate decisional capacity among patients in treatment settings as well as subjects in clinical research settings has increasingly gained attention. Decisional capacity is generally conceptualized to include not only an understanding of disclosed information but also an appreciation of its significance, the ability to use the information in reasoning, and the ability to express a clear choice. The authors critically reviewed existing measures of decisional capacity for research and treatment. Method: Electronic medical and legal databases were searched for articles published from 1980 to 2004 describing structured assessments of adults’ capacity to consent to clinical treatment or research protocols. The authors identified 23 decisional capacity assessment instruments and evaluated each in terms of format, content, administration features, and psychometric properties. Results: Six instruments focused solely on understanding of disclosed information, and 11 tested for understanding, appreciation, reasoning, and expression of a choice. The instruments varied substantially in format, degree of standardization of disclosures, flexibility of item content, and scoring procedures. Reliability and validity also varied widely. All instruments have limitations, ranging from lack of supporting psychometric data to lack of generalizability across contexts. Conclusions: Of the instruments reviewed, the MacArthur Competence Assessment Tools for Clinical Research and for Treatment have the most empirical support, although other instruments may be equally or better suited to certain situations. Contextual factors are important but understudied. Capacity assessment tools should undergo further empirically based development and refinement as well as testing with a variety of populations.

Informed consent is a cornerstone of ethical clinical practice and clinical research (1). Meaningful consent is possible only when the person giving it has the capacity to use disclosed information in deciding whether to accept a proposed treatment or consent to a research protocol. Legal and bioethics experts generally agree that decisional capacity includes at least four components (2, 3): understanding information relevant to the decision; appreciating the information (applying the information to one’s own situation); using the information in reasoning; and expressing a consistent choice. These capacities may be reduced by cognitive impairment, certain psychiatric symptoms, and situational factors such as the complexity of the information disclosed and the manner of disclosure (4–6). Thus, it is fundamental to the notion of capacity that different contexts may demand different kinds or levels of functional abilities (2, 7–12). A lower level of decisional capacity may be required for a low-risk treatment or research protocol than for a higher-risk one, although it has not been clearly established what levels are appropriate for what decisions.

In treatment settings, formal capacity assessment has traditionally come into consideration when a patient refuses a recommended treatment (3, 10, 11, 13), although it may increasingly arise in other circumstances as physicians perceive themselves to be vulnerable to lawsuits from dissatisfied patients. In the research context, the main focus has been on how to implement informed consent for studies that enroll subjects who are at risk of having impaired decisional capacity. Despite the attention given to the topic over many decades, no consensus has emerged on how informed consent should be managed; what we currently have, as Michels observed, is “a hodgepodge of practices” (14). Some institutional review boards require documentation of study volunteers’ capacity to consent. For example, our institutional review board at the University of California, San Diego, requires explicit assessment of decisional capacity for all protocols that involve more than “minimal risk” in studies that sample from populations considered at risk for impaired decisional capacity (15). Indeed, over a quarter century ago, the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research (better known as the Belmont Commission) made it clear that investigators are responsible for ascertaining that participants adequately understand information disclosed in the consent process (16).

Over the past two decades, numerous tools have been developed to assess decisional capacity. Although some instruments have been more widely adopted than others, there is no gold standard (17–20). In this article, we critically review existing instruments for assessing capacity, highlighting information about their content, administration, and psychometric properties as well as their strengths and limitations for use in particular contexts.

Method

We conducted searches on PubMed (MEDLINE), PsycINFO, ArticleFirst, LexisNexis, and Westlaw for English-language articles published from January 1980 through December 2004 describing or using structured instruments designed to assess adults’ capacity to consent to clinical treatment or research. We used search terms related to competency, decision-making capacity, consent, and assessment instruments. From the search results, we selected articles that described instruments in sufficient detail to permit evaluation of their content, administration, and psychometric properties. We excluded articles describing instruments that focus on the consent capacity of children or adolescents, advance directives, testamentary capacity, or capacity to consent to inpatient hospitalization, as consent in each of these contexts raises additional considerations beyond the scope of this review.

The search and selection process yielded 23 instruments. For each one, we abstracted information about domains assessed, administration characteristics (including question format and time required), and the nature of the standardization samples. Because determinations of decisional capacity should not be observer dependent, we examined interrater reliability. To the degree that a person’s decisional capacity is stable over brief spans of time, scores should be consistent over brief follow-up intervals, so we also looked at test-retest reliability.

For each instrument reviewed, we examined the instrument itself and searched the literature for evidence related to content validity and criterion validity (21). Content validity, the degree to which the instrument’s content reflects the universe of content relevant to the constructs being measured, is usually determined on the basis of expert consensus. There is some controversy over what the appropriate content should be for decisional capacity assessment instruments and over the determinative weight the various components of decisional capacity should carry (10, 13, 22, 23). Moreover, different legal jurisdictions use different standards, which further complicates decisions on what standards to include in an instrument (8, 11). We examined whether each instrument’s constructs appeared to be consistent with widely accepted theory on competency and capacity (2, 8, 24). There are divergent views on the four-component model, and we recognize that not everyone would agree with the validity judgments we made. This lack of consensus underscores the need for further development and refinement of instruments as well as clarification of the standards needed for different contexts and choices.

Criterion validity, the degree to which scores on a scale are associated with an accepted concurrent standard (concurrent validity) or with a future state or outcome (predictive validity), is most often assessed in terms of intercorrelations. Other useful values are the measure’s sensitivity (the proportion of impaired individuals it correctly identifies as impaired, or its valid positive rate) and its specificity (the proportion of unimpaired individuals it correctly identifies as unimpaired, or its valid negative rate). The accepted standard against which criterion validity is evaluated may be another established measure. In the absence of a gold standard for measuring decisional capacity, however, validation against multiple criteria is desirable (21). The criteria could include judgments of experts on decisional capacity, although establishing criteria for “expert” status may be difficult. Limitations of this approach in the context of our study include the documented inconsistency of clinicians’ application of relevant legal standards and the frequent discordance of expert opinions on decisional capacity (25–28).
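
As an illustration of how sensitivity and specificity are obtained when an instrument's classifications are compared with a criterion judgment (for example, a clinician's rating), the following minimal Python sketch tallies a 2×2 table and computes both rates. The function names, the `ConfusionCounts` class, and the data are hypothetical and are not taken from any of the reviewed studies; the sketch also assumes that each person has already been classified as impaired or unimpaired by both sources.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """2x2 counts of instrument result versus criterion judgment (hypothetical helper)."""
    true_impaired: int      # instrument: impaired,   criterion: impaired
    false_impaired: int     # instrument: impaired,   criterion: unimpaired
    true_unimpaired: int    # instrument: unimpaired, criterion: unimpaired
    false_unimpaired: int   # instrument: unimpaired, criterion: impaired


def tally(instrument_impaired, criterion_impaired):
    """Count the four agreement cells from two parallel lists of booleans."""
    if len(instrument_impaired) != len(criterion_impaired):
        raise ValueError("inputs must have the same length")
    tp = fp = tn = fn = 0
    for inst, crit in zip(instrument_impaired, criterion_impaired):
        if inst and crit:
            tp += 1
        elif inst and not crit:
            fp += 1
        elif not inst and not crit:
            tn += 1
        else:
            fn += 1
    return ConfusionCounts(tp, fp, tn, fn)


def sensitivity(c):
    """Valid positive rate: share of criterion-impaired people flagged as impaired."""
    return c.true_impaired / (c.true_impaired + c.false_unimpaired)


def specificity(c):
    """Valid negative rate: share of criterion-unimpaired people classified as unimpaired."""
    return c.true_unimpaired / (c.true_unimpaired + c.false_impaired)


# Made-up classifications for eight people (True = impaired); illustration only.
instrument = [True, True, False, False, True, False, False, False]
criterion = [True, False, False, False, True, True, False, False]

counts = tally(instrument, criterion)
print(sensitivity(counts), specificity(counts))
```

Both rates are undefined when the criterion places no one in the relevant group, so a real analysis would need to check for empty denominators. The rates are also only as trustworthy as the criterion itself, which is the difficulty with expert judgments noted above.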

Results

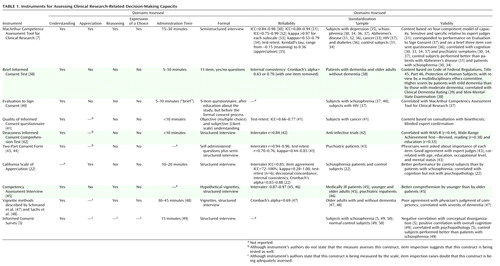

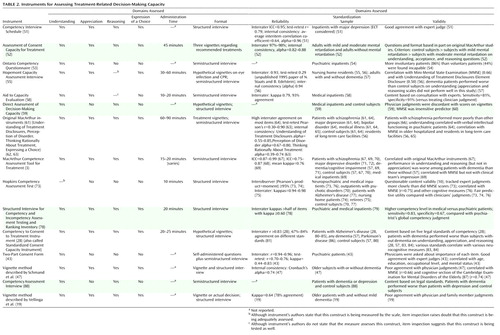

Of the 23 decisional capacity assessment instruments we identified, 10 focus on consent in clinical research (Table 1) and 15 on consent in treatment (Table 2); two of the instruments are used in both contexts (43, 47).

Capacity to Consent to Clinical Research

Two of the 10 instruments that focus on capacity to consent to a clinical research protocol, the MacArthur Competence Assessment Tool for Clinical Research and the Informed Consent Survey, are intended to measure all four capacity domains, although whether the latter instrument adequately assesses appreciation and reasoning is debatable. Measures of understanding are included in nine instruments (5, 7, 38, 40–45, 47), five of which assess only understanding (39, 40–44). The California Scale of Appreciation focuses solely on assessment of appreciation (although understanding is likely also tapped), and the Competency Assessment Interview focuses only on understanding and reasoning. The vignette method (47, 48) appears to cover understanding, reasoning, and choice, although appreciation may be tapped as well.

An important variation among the instruments is whether the disclosed information and query content are established by the instrument itself or must be tailored for the specific protocol. For instance, participants may receive standard disclosures and questions, and acceptable responses to the questions may be predetermined. The California Scale of Appreciation and the Competency Assessment Interview use hypothetical study protocols and standard questions (although the California scale could be tailored). Another approach is for the instrument to specify the text of the probes (e.g., “What is the purpose of this study?”) while allowing the disclosures and acceptable responses to be tailored; this approach is used in the Evaluation to Sign Consent, the Quality of Informed Consent questionnaire, the Deaconess Informed Consent Comprehension Test, the Informed Consent Survey, the MacArthur Competence Assessment Tool for Clinical Research, the Two-Part Consent Form, and the vignette method.

The instruments vary in the degree of skill and training required of interviewers for valid administration. The Quality of Informed Consent questionnaire and the Two-Part Consent Form are self-administered; a drawback of this format is that the process does not have a built-in opportunity to ask follow-up questions. The Evaluation to Sign Consent, the Brief Informed Consent Test, the Deaconess Informed Consent Comprehension Test, the Informed Consent Survey, and the vignette method use interviews, and all appear to require only minimal to moderate training of interviewers or scorers. Training is required for administering the MacArthur Competence Assessment Tool for Clinical Research, the only instrument for which a published manual provides scoring guidelines (7), because the items must be scored during the interview so that appropriate follow-up questions or requests for clarification can be posed. The California Scale of Appreciation and the Competency Assessment Interview also require moderate training. Most of the instruments take less than 10 minutes to administer, although the more comprehensive ones take longer.

Psychometricians generally suggest that instruments to be used for clinical decision making have reliability values of at least 0.80 (21). By this standard, most of the instruments we examined had acceptable interrater reliability, although no interrater reliability information was provided for the Brief Informed Consent Test, the Evaluation to Sign Consent, the Informed Consent Survey, or the vignette method. Test-retest reliability has been reported for four of the scales (the MacArthur Competence Assessment Tool for Clinical Research, the Quality of Informed Consent questionnaire, the Two-Part Consent Form, and the California Scale of Appreciation), with values ranging from –0.15 to 0.77 (the one negative correlation was for a specific subscale of the MacArthur Competence Assessment Tool for Clinical Research in one specific application study of women with depression [35]).
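
To make the 0.80 benchmark concrete, the following minimal Python sketch computes a test-retest correlation from two administrations of the same scale and compares it with the threshold. The source studies report test-retest values as correlations, but this review does not state which coefficient each study used, so the use of Pearson's r here is an assumption; the function names and scores are hypothetical.

```python
import math

RELIABILITY_THRESHOLD = 0.80  # benchmark for clinical decision making cited in the text (21)


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when scores do not vary")
    return cov / math.sqrt(ss_x * ss_y)


def meets_threshold(r, threshold=RELIABILITY_THRESHOLD):
    """True when a reliability coefficient reaches the chosen benchmark."""
    return r >= threshold


# Made-up total scores for the same six people at two sessions; illustration only.
time_1 = [10, 14, 18, 22, 25, 30]
time_2 = [12, 13, 20, 21, 27, 29]

r = pearson_r(time_1, time_2)
print(round(r, 2), meets_threshold(r))
```

By this check, the highest test-retest value reported above (0.77) would fall just short of the benchmark, which is one reason the brief follow-up interval and the stability of the person's actual capacity both matter when interpreting such coefficients.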

When item content varies with specific use, another potential source of variance may be introduced by the disclosures and acceptable responses that are specified for the different uses. The manual for the MacArthur Competence Assessment Tool for Clinical Research gives fairly detailed instructions on preparation of the items. However, no data are available on how consistent this and other modifiable instruments are, even with trained users—that is, on how “reliable” the item content preparation phase is, or the “inter-item-writer reliability.” In the absence of such data, it is not clear whether, or under what conditions, results from these instruments can be generalized across specific uses, even when referring to similar protocols. The reliability and validity data of one version may not generalize to other versions prepared by other users.

Information about concurrent, criterion, or predictive validity has been published for the MacArthur Competence Assessment Tool for Clinical Research (31, 37), the Brief Informed Consent Test (38), the Evaluation to Sign Consent (37), the Deaconess Informed Consent Comprehension Test (42), the Two-Part Consent Form (43), and the vignette method as described by Schmand et al. (47). The external criterion was generally capacity judgments made by physicians. However, interpreting lack of agreement between “expert” judgment and subjects’ performance on the instruments themselves is problematic. Convergence with opinions from other potential experts or stakeholders (e.g., patients, family members, and legal or regulatory authorities) was rare, although judgments of some nonphysician experts have been included in studies of the Quality of Informed Consent questionnaire (41) and the Two-Part Consent Form (43). Several reports attempted to establish concurrent validity by showing the association with general functional or cognitive measures (38, 42, 47), but because decisional capacity is context- and decision-specific, such correlations are not fully germane. Finally, Cronbach’s alpha, a measure of internal consistency, was 0.69 (fair) for the Schmand et al. vignette method (47) and ranged from 0.83 to 0.88 for the California Scale of Appreciation (22).
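
For readers less familiar with Cronbach's alpha, the following minimal Python sketch shows the standard computation: with k items, alpha equals k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total scores. The function name and the item scores are hypothetical and do not reproduce data from any reviewed instrument.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    if len(item_scores) < 2:
        raise ValueError("need at least 2 respondents")
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    if any(len(row) != k for row in item_scores):
        raise ValueError("every respondent must have a score for every item")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in item_scores])
    if total_var == 0:
        raise ValueError("alpha is undefined when total scores do not vary")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Made-up scores: five respondents by three items, each item scored 0 to 2.
scores = [
    [2, 2, 1],
    [1, 1, 1],
    [0, 1, 0],
    [2, 1, 2],
    [0, 0, 1],
]
print(round(cronbach_alpha(scores), 2))
```

Alpha rises when items covary strongly relative to their individual variances, so it reflects the homogeneity of a scale's items and says nothing about agreement between raters or stability over time.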

Capacity to Consent to Treatment

All 15 of the instruments that focus on capacity to consent to treatment (Table 2) measure understanding, but only nine of them appear to assess all four capacity dimensions. Two of the remaining six instruments assess only understanding, two assess understanding and appreciation, and two assess understanding and reasoning.

Preset vignettes or content are used as stimuli in eight of the 15 instruments: the Assessment of Consent Capacity for Treatment, the Hopemont Capacity Assessment Interview, Fitten et al.’s direct assessment of decision-making capacity (60), the original MacArthur instruments (63), the Hopkins Competency Assessment Test, the Competency to Consent to Treatment Instrument, and the vignette methods (although Vellinga et al. [19] presented the actual treatment decision to a subset of patients). In contrast, the patient’s actual treatment decision is used in the MacArthur Competence Assessment Tool for Treatment, the Competency Interview Schedule, the Ontario Competency Questionnaire, the Aid to Capacity Evaluation, the Two-Part Consent Form, the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory, and the Competency Assessment Interview; and it can form the basis for the Vellinga et al. vignette method.

All 15 instruments employ structured or semistructured interviews; the Two-Part Consent Form begins with a self-administered questionnaire, which is followed by additional questions when the questionnaire is returned (43). The degree of training needed to administer these instruments ranges from minimal, as in the Hopkins Competency Assessment Test, to more substantial, as in the Competency Interview Schedule, the Assessment of Consent Capacity for Treatment, the Ontario Competency Questionnaire, the Hopemont Capacity Assessment Interview, the Aid to Capacity Evaluation, Fitten et al.’s direct assessment of decision-making capacity (59, 60), the original MacArthur instruments (3, 63), the MacArthur Competence Assessment Tool for Treatment, the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory, the Two-Part Consent Form, the Competency to Consent to Treatment Instrument, the Competency Assessment Interview, and the two vignette methods. Detailed manuals to guide administration, scoring, and interpretation are available only for the Hopemont Capacity Assessment Interview, the original MacArthur Competence Study instruments (Understanding of Treatment Disclosures, Perception of Disorder, Thinking Rationally About Treatment), and the MacArthur Competence Assessment Tool for Treatment; a training video is also available for the last of these. Administration time was not widely reported for these instruments, but it varies with the comprehensiveness of the evaluation.

Information on reliability was reported for 12 of the instruments. Adequate interrater reliability (≥0.80) has been reported for the Competency Interview Schedule (51), the Assessment of Consent Capacity for Treatment (52), the Aid to Capacity Evaluation (58), the Hopemont Capacity Assessment Interview (unpublished 1995 paper of N. Staats and B. Edelstein), the Understanding of Treatment Disclosures, Perception of Disorder, and Thinking Rationally About Treatment scales (63), the MacArthur Competence Assessment Tool for Treatment (67, 69), the Hopkins Competency Assessment Test (73), the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory (78), the Competency to Consent to Treatment Instrument (28), and the Two-Part Consent Form (43). Data on internal consistency have been reported for the Competency Interview Schedule (51), the Hopemont Capacity Assessment Interview (56), and the original MacArthur instruments (59, 63, 88); for the MacArthur instruments, internal consistency seemed to vary with the study population, with higher consistency reported for hospitalized psychiatric patients than for cardiac patients and healthy community samples. The authors of the Competency Interview Schedule (51) and Schmand et al. (47) used interitem correlations to evaluate internal consistency. Test-retest reliability has been reported for only four of the scales: the Competency Interview Schedule (51), the Hopemont Capacity Assessment Interview (unpublished 1995 paper of N. Staats and B. Edelstein), the original MacArthur instruments (63), and the Two-Part Consent Form (43). For the seven instruments with variable item content, no data have been published on the reliability of item preparation or on associations between versions prepared by different users.

Data related to concurrent, criterion, or predictive validity have been published for all of the treatment-consent capacity instruments except the Competency Assessment Interview. In most cases, the external criterion was judgments of decisional capacity made by physicians. Data on the various instruments’ ability to discriminate between patients who were judged by experts as competent and those who were judged incompetent were reported for the Competency Interview Schedule (51, 89), the Aid to Capacity Evaluation (58), the Hopkins Competency Assessment Test (73, 76), Fitten et al.’s direct assessment of decision-making capacity (59), the MacArthur Competence Assessment Tool for Treatment (69, 70), the Structured Interview for Competency and Incompetency Assessment Testing and Ranking Inventory (78), and the two vignette methods (19, 47). For Fitten et al.’s assessment instrument, the MacArthur Competence Assessment Tool for Treatment, and both vignette methods, performance on the instrument did not correspond to physicians’ judgments of older patients’ global competency; this lack of correspondence was interpreted as indicating that clinicians were relatively insensitive to the decisional impairment of these study subjects. Performance on cognitive tests was correlated with decisional capacity scores in some cases (47, 56, 64, 69, 76) but not in others (59, 60, 73, 74, 76). Such findings are consistent with the notion that decisional capacity is a construct distinct from cognitive domains, although cognitive factors are important in the measured abilities. The degree of convergence between the scale’s results and opinions from family members was evaluated in Vellinga et al.’s vignette method (19); family members’ judgments of subjects’ competency did not correspond well to results on the instrument.

Discussion

We identified 23 instruments for assessing capacity to consent to research protocols (N=10) or to treatment (N=15) (two instruments were used in both contexts). With a few exceptions, the instruments focused mostly on the understanding component of decisional capacity. There is a clear need to refine measurement of the other capacity domains.

Selection of an instrument would depend on the context in which it is to be used. Common uses include making definitive capacity determinations, screening to identify individuals who need further evaluation or remediation (30, 40), and evaluating capacity as a dependent variable in decisional capacity research (5, 30–35, 37, 62, 63).

A fundamental challenge in selecting an instrument is the lack of consistency across instruments in what is being measured, despite the use of similar labels for these constructs. Definitions of “reasoning” vary from the ability to provide “rational reasons” for one’s choice (28, 51) to making the “reasonable” choice in a given situation (28) to the underlying cognitive processes used in reaching a decision (e.g., “consequential” and “comparative” reasoning in the MacArthur Competence Assessment Tools for Clinical Research [7] and for Treatment [67]). Definitions and measurements of appreciation are also variable, with the focus ranging from appreciation of the consequences of a choice (28) to acknowledgment of the presence of a disorder and its treatment potential (63) to the absence of “patently false beliefs” driving one’s appreciation (22). Even the assessment of understanding varies from requiring simple repetition of the interviewer’s words or the wording on a consent form to more detailed evaluations of deeper comprehension; it also may incorporate the person’s ability to retain information. Given these variations, those who work in the field of capacity assessment need to develop consensus on the appropriate definitions and standards for measuring each domain. This will be a key task as the field moves forward, although we do not anticipate that it will be an easy one.

A primary concern in developing a capacity assessment measure, as Appelbaum and Grisso wrote with regard to the original MacArthur Treatment Competence Study, is that the functional abilities being assessed “should have close conceptual relationships with appropriate standards of competence” (62). Thus, for instance, a test of general cognitive abilities, such as the Mini-Mental State Examination (90), would not be an appropriate instrument for gauging the more specific, context-dependent ability to understand disclosed material about a recommended treatment (12, 60, 69, 91). Although we have pointed out where instruments were correlated with more general measures, we do not believe such correlations constitute a strong line of evidence in favor of validity. Rather, the accumulation of data supporting a variety of types of validity will be the best evidence of validity. Moreover, validity should be understood as something arrayed along a continuum rather than either present or absent. Given questions about whether any instrument can gauge decisional capacity adequately without considering contextual and individual factors, validity will likely remain an imperfect aspect of these assessment tools.

Another consideration is how the risks or risk-benefit ratio of a treatment or research protocol should affect the definition of minimally acceptable levels of understanding, appreciation, and reasoning for consent. There appears to be general agreement that flexibility is needed in setting thresholds and that these thresholds should depend on the type of decision being made (7). Thus, for example, it may be appropriate to require a greater capacity for consent for a high-risk protocol than for a low-risk one. It may also be appropriate to weight the various subdomains of capacity differently in different contexts; for example, full appreciation that one has a disorder that requires treatment may be more relevant when consent is sought for participation in a randomized, controlled trial than for a nonintervention or observational study. How to operationalize the thresholds and weighting of such subdomains in a given context is less clear, however, and has received little empirical attention.

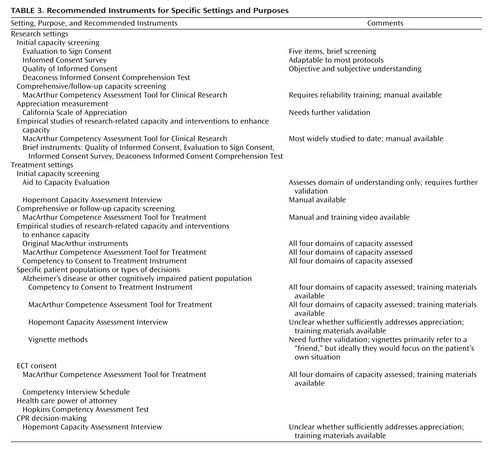

It must be acknowledged that every instrument has limitations. As a general recommendation, however, the best choices for measuring capacity to consent to research and treatment, given their comprehensiveness and supporting psychometric data, will frequently be the MacArthur Competence Assessment Tools for Clinical Research and for Treatment, respectively. Of the instruments we examined that focus on research, the MacArthur Competence Assessment Tool for Clinical Research has been the most widely adopted, and, as a result, numerous lines of evidence supporting its reliability and construct validity have accumulated (30–33, 35, 36). The MacArthur Competence Assessment Tool for Treatment has been validated with a variety of populations and is among the few instruments for which extensive training materials are available. Nevertheless, even the MacArthur instruments have substantial limitations, such as the lack of empirical documentation of the psychometric equivalence of tailored versions, the need for substantial training and reliability documentation (particularly when used for research purposes), and the probable need for further study and refinement of the subscales for appreciation and reasoning. The lack of a predetermined cutoff separating capacity and incapacity is less a limitation than an intended feature of the MacArthur instruments; they were designed not as stand-alone tools for capacity assessment but rather as aids to capacity assessment. In any case, factors unique to certain contexts or populations will make other instruments preferable in some situations. Table 3 provides recommendations about instruments for use in various situations or settings.

Conclusions

Interest in research on decisional capacity has grown in recent years, in part as a result of concerns about the adequacy of consent procedures in research in which subjects with an elevated risk of having impaired capacity were exposed to more than minimal risk (92). With increasingly complex research protocols and increasingly sophisticated and sometimes risky treatment options, and with an aging population at risk of having cognitive impairment and therefore impaired decisional capacity (13), there is an undeniable need for reliable and valid capacity assessment methods.

1. Faden RR, Beauchamp TL, King NMP: A History and Theory of Informed Consent. New York, Oxford University Press, 1986Google Scholar

2. Roth LH, Meisel A, Lidz CW: Tests of competency to consent to treatment. Am J Psychiatry 1977; 134:279–284Google Scholar

3. Grisso T, Appelbaum PS: Assessing Competence to Consent to Treatment: A Guide for Physicians and Other Health Professionals. New York, Oxford University Press, 1998Google Scholar

4. Dunn LB, Jeste DV: Enhancing informed consent for research and treatment. Neuropsychopharmacology 2001; 24:595–607Google Scholar

5. Wirshing DA, Wirshing WC, Marder SR, Liberman RP, Mintz J: Informed consent: assessment of comprehension. Am J Psychiatry 1998; 155:1508–1511Google Scholar

6. Roberts LW: Informed consent and the capacity for voluntarism. Am J Psychiatry 2002; 159:705–712Google Scholar

7. Appelbaum PS, Grisso T: MacCAT-CR: MacArthur Competence Assessment Tool for Clinical Research. Sarasota, Fla, Professional Resource Press, 2001Google Scholar

8. Drane JF: Competency to give an informed consent: a model for making clinical assessments. JAMA 1984; 252:925–927Google Scholar

9. Kim SYH, Karlawish JHT, Caine ED: Current state of research on decision-making competence of cognitively impaired elderly persons. Am J Geriatr Psychiatry 2002; 10:151–165Google Scholar

10. Grisso T: Evaluating Competencies: Forensic Assessments and Instruments, 2nd ed. New York, Kluwer Academic/Plenum, 2003Google Scholar

11. Kapp MB, Mossman D: Measuring decisional capacity: cautions of the construction of a “capacimeter.” Psychol Public Policy Law 1996; 2:73–95Google Scholar

12. Glass KC: Refining definitions and devising instruments: two decades of assessing mental competence. Int J Law Psychiatry 1997; 20:5–33Google Scholar

13. Moye J: Assessment of competency and decision making capacity, in Handbook of Assessment in Clinical Gerontology. Edited by Lichtenberg P. New York, Wiley, 1999Google Scholar

14. Michels R: Research on persons with impaired decision making and the public trust (editorial). Am J Psychiatry 2004; 161:777–779Google Scholar

15. UCSD Human Research Protections Program: Decision making capacity guidelines. University of California, San Diego, 2004. Available at http://irb.ucsd.edu/decisional.shtmlGoogle Scholar

16. National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research: The Belmont Report (DHEW Publication OS-78-0012). Washington, DC, US Government Printing Office, 1978Google Scholar

17. Marson DC, Ingram KK: Commentary: competency to consent to treatment: a growing field of research. J Ethics Law Aging 1996; 2:59–63Google Scholar

18. Karlawish JH: Competency in the age of assessment. Lancet 2004; 364:1383–1384Google Scholar

19. Vellinga A, Smit JH, Van Leeuwen E, van Tilburg W, Jonker C: Competence to consent to treatment of geriatric patients: judgements of physicians, family members, and the vignette method. Int J Geriatr Psychiatry 2004; 19:645–654Google Scholar

20. Sullivan K: Neuropsychological assessment of mental capacity. Neuropsychol Rev 2004; 14:131–142Google Scholar

21. Anastasi A, Urbina S: Psychological Testing, 7th ed. Upper Saddle River, NJ, Prentice Hall, 1997Google Scholar

22. Saks ER, Dunn LB, Marshall BJ, Nayak GV, Golshan S, Jeste DV: The California Scale of Appreciation: a new instrument to measure the appreciation component of capacity to consent to research. Am J Geriatr Psychiatry 2002; 10:166–174Google Scholar

23. Chopra MP, Weiss D, Stinnett JL, Oslin DW: Treatment-related decisional capacity. Am J Geriatr Psychiatry 2003; 11:257–258Google Scholar

24. Berg JW: Constructing competence: formulating standards of legal competence to make medical decisions. Rutgers Law Rev 1996; 48:345–396Google Scholar

25. Shah A, Mukherjee S: Ascertaining capacity to consent: a survey of approaches used by psychiatrists. Med Sci Law 2003; 43:231–235

26. Markson LJ, Kern DC, Annas GJ, Glantz LH: Physician assessment of patient competence. J Am Geriatr Soc 1994; 42:1074–1080

27. Edelstein B: Challenges in the assessment of decision-making capacity. J Aging Studies 2000; 14:423–437

28. Marson DC, Ingram KK, Cody HA, Harrell LE: Assessing the competency of patients with Alzheimer’s disease under different legal standards. Arch Neurol 1995; 52:949–954

29. Westen D, Rosenthal R: Quantifying construct validity: two simple measures. J Pers Soc Psychol 2003; 84:608–618

30. Carpenter WT, Gold JM, Lahti AC, Queern CA, Conley RR, Bartko JJ, Kovnick J, Appelbaum PS: Decisional capacity for informed consent in schizophrenia research. Arch Gen Psychiatry 2000; 57:533–538

31. Kim SYH, Caine ED, Currier GW, Leibovici A, Ryan JM: Assessing the competence of persons with Alzheimer’s disease in providing informed consent for participation in research. Am J Psychiatry 2001; 158:712–717

32. Karlawish JH, Casarett DJ, James BD: Alzheimer’s disease patients’ and caregivers’ capacity, competency, and reasons to enroll in an early-phase Alzheimer’s disease clinical trial. J Am Geriatr Soc 2002; 50:2019–2024

33. Casarett DJ, Karlawish JH, Hirschman KB: Identifying ambulatory cancer patients at risk of impaired capacity to consent to research. J Pain Symptom Manage 2003; 26:615–624

34. Kovnick JA, Appelbaum PS, Hoge SK, Leadbetter RA: Competence to consent to research among long-stay inpatients with chronic schizophrenia. Psychiatr Serv 2003; 54:1247–1252

35. Appelbaum PS, Grisso T, Frank E, O’Donnell S, Kupfer DJ: Competence of depressed patients to consent to research. Am J Psychiatry 1999; 156:1380–1384

36. Palmer BW, Dunn LB, Appelbaum PS, Mudaliar S, Thal L, Henry R, Golshan S, Jeste DV: Assessment of capacity to consent to research among older persons with schizophrenia, Alzheimer disease, or diabetes mellitus: comparison of a 3-item questionnaire with a comprehensive standardized capacity instrument. Arch Gen Psychiatry 2005; 62:726–733

37. Moser D, Schultz S, Arndt S, Benjamin ML, Fleming FW, Brems CS, Paulsen JS, Appelbaum PS, Andreasen NC: Capacity to provide informed consent for participation in schizophrenia and HIV research. Am J Psychiatry 2002; 159:1201–1207

38. Buckles VD, Powlishta KK, Palmer JL, Coats M, Hosto T, Buckley A, Morris JC: Understanding of informed consent by demented individuals. Neurology 2003; 61:1662–1666

39. Morris JC: The Clinical Dementia Rating (CDR): current version and scoring rules. Neurology 1993; 43:2412–2414

40. DeRenzo EG, Conley RR, Love RC: Assessment of capacity to give consent to research participation: state-of-the-art and beyond. J Health Care Law Policy 1998; 1:66–87

41. Joffe S, Cook EF, Cleary PD, Clark JW, Weeks JC: Quality of Informed Consent: a new measure of understanding among research subjects. J Natl Cancer Inst 2001; 93:139–147

42. Miller CK, O’Donnell D, Searight R, Barbarash RA: The Deaconess Informed Consent Comprehension Test: an assessment tool for clinical research subjects. Pharmacotherapy 1996; 16:872–878

43. Roth LH, Lidz CW, Meisel A, Soloff PH, Kaufman K, Spiker DG, Foster FG: Competency to decide about treatment or research: an overview of some empirical data. Int J Law Psychiatry 1982; 5:29–50

44. Miller R, Willner HS: The two-part consent form: a suggestion for promoting free and informed consent. N Engl J Med 1974; 290:964–966

45. Stanley B, Guido J, Stanley M, Shortell D: The elderly patient and informed consent: empirical findings. JAMA 1984; 252:1302–1306

46. Stanley B, Stanley M: Psychiatric patients’ comprehension of consent information. Psychopharmacol Bull 1987; 23:375–377

47. Schmand B, Gouwenberg B, Smit JH, Jonker C: Assessment of mental competency in community-dwelling elderly. Alzheimer Dis Assoc Disord 1999; 13:80–87

48. Sachs G, Stocking C, Stern R, Cox D: Ethical aspects of dementia research: informed consent and proxy consent. Clin Res 1994; 42:403–412

49. Dunn LB, Lindamer LA, Palmer BW, Golshan S, Schneiderman LJ, Jeste DV: Improving understanding of research consent in middle-aged and elderly patients with psychotic disorders. Am J Geriatr Psychiatry 2002; 10:142–150

50. Dunn LB, Lindamer LA, Palmer BW, Schneiderman LJ, Jeste DV: Enhancing comprehension of consent for research in older patients with psychosis: a randomized study of a novel educational strategy. Am J Psychiatry 2001; 158:1911–1913

51. Bean G, Nishisato S, Rector NA, Glancy G: The psychometric properties of the Competency Interview Schedule. Can J Psychiatry 1994; 39:368–376

52. Cea CD, Fisher CB: Health care decision-making by adults with mental retardation. Ment Retard 2003; 41:78–87

53. Draper RJ, Dawson D: Competence to consent to treatment: a guide for the psychiatrist. Can J Psychiatry 1990; 35:285–289

54. Hoffman BF, Srinivasan J: A study of competence to consent to treatment in a psychiatric hospital. Can J Psychiatry 1992; 37:179–182

55. Edelstein B: Hopemont Capacity Assessment Interview Manual and Scoring Guide. Morgantown, WV, West Virginia University, 1999

56. Pruchno RA, Smyer MA, Rose MS, Hartman-Stein PE, Henderson-Laribee DL: Competence of long-term care residents to participate in decisions about their medical care: a brief, objective assessment. Gerontologist 1995; 35:622–629

57. Moye J, Karel MJ, Azar AR, Gurrera RJ: Capacity to consent to treatment: empirical comparison of three instruments in older adults with and without dementia. Gerontologist 2004; 44:166–175

58. Etchells E, Darzins P, Silberfeld M, Singer PA, McKenny J, Naglie G, Katz M, Guyatt GH, Molloy W, Strang D: Assessment of patient capacity to consent to treatment. J Gen Intern Med 1999; 14:27–34

59. Fitten LJ, Waite MS: Impact of medical hospitalization on treatment decision-making capacity in the elderly. Arch Intern Med 1990; 150:1717–1721

60. Fitten LJ, Lusky R, Hamann C: Assessing treatment decision-making capacity in elderly nursing home residents. J Am Geriatr Soc 1990; 38:1097–1104

61. Grisso T, Appelbaum PS: The MacArthur Treatment Competence Study, III: abilities of patients to consent to psychiatric and medical treatments. Law Hum Behav 1995; 19:149–174

62. Appelbaum PS, Grisso T: The MacArthur Treatment Competence Study, I: mental illness and competence to consent to treatment. Law Hum Behav 1995; 19:105–126

63. Grisso T, Appelbaum PS, Mulvey EP, Fletcher K: The MacArthur Treatment Competence Study, II: measures of abilities related to competence to consent to treatment. Law Hum Behav 1995; 19:127–148

64. Grisso T, Appelbaum PS: Mentally ill and non-mentally-ill patients’ abilities to understand informed consent disclosures for medication. Law Hum Behav 1991; 15:377–388

65. Dellasega C, Frank L, Smyer M: Medical decision-making capacity in elderly hospitalized patients. J Ethics Law Aging 1996; 2:65–74

66. Grisso T, Appelbaum PS: Comparison of standards for assessing patients’ capacities to make treatment decisions. Am J Psychiatry 1995; 152:1033–1037

67. Grisso T, Appelbaum PS, Hill-Fotouhi C: The MacCAT-T: a clinical tool to assess patients’ capacities to make treatment decisions. Psychiatr Serv 1997; 48:1415–1419

68. Palmer BW, Nayak GV, Dunn LB, Appelbaum PS, Jeste DV: Treatment-related decision-making capacity in middle-aged and older patients with psychosis: a preliminary study using the MacCAT-T and HCAT. Am J Geriatr Psychiatry 2002; 10:207–211

69. Raymont V, Bingley W, Buchanan A, David AS, Hayward P, Wessely S, Hotopf M: Prevalence of mental incapacity in medical inpatients and associated risk factors: cross-sectional study. Lancet 2004; 364:1421–1427

70. Palmer BW, Dunn LB, Appelbaum PS, Jeste DV: Correlates of treatment-related decision-making capacity among middle-aged and older patients with schizophrenia. Arch Gen Psychiatry 2004; 61:230–236

71. Vollmann J, Bauer A, Danker-Hopfe H, Helmchen H: Competence of mentally ill patients: a comparative empirical study. Psychol Med 2003; 33:1463–1471

72. Lapid MI, Rummans TA, Poole KL, Pankratz S, Maurer MS, Rasmussen KG, Philbrick KL, Appelbaum PS: Decisional capacity of severely depressed patients requiring electroconvulsive therapy. J ECT 2003; 19:67–72

73. Janofsky JS, McCarthy RJ, Folstein MF: The Hopkins Competency Assessment Test: a brief method for evaluating patients’ capacity to give informed consent. Hosp Community Psychiatry 1992; 43:132–136

74. Barton CD, Mallik HS, Orr WB, Janofsky JS: Clinicians’ judgment of capacity of nursing home patients to give informed consent. Psychiatr Serv 1996; 47:956–960

75. Royall DR, Cordes J, Polk M: Executive control and the comprehension of medical information by elderly retirees. Exp Aging Res 1997; 23:301–313

76. Holzer JC, Gansler DA, Moczynski NP, Folstein MF: Cognitive functions in the informed consent evaluation process: a pilot study. J Am Acad Psychiatry Law 1997; 25:531–540

77. Bassett SS: Attention: neuropsychological predictor of competency in Alzheimer’s disease. J Geriatr Psychiatry Neurol 1999; 12:200–205

78. Tomoda A, Yasumiya R, Sumiyama T, Tsukada K, Hayakawa T, Matsubara K, Kitamura F, Kitamura T: Validity and reliability of Structured Interview for Competency Incompetency Assessment Testing and Ranking Inventory. J Clin Psychol 1997; 53:443–450

79. Kitamura F, Tomoda A, Tsukada K, Tanaka M, Kawakami I, Mishima S, Kitamura T: Method for assessment of competency to consent in the mentally ill: rationale, development, and comparison with the medically ill. Int J Law Psychiatry 1998; 21:223–244

80. Marson DC, McInturff B, Hawkins L, Bartolucci A, Harrell LE: Consistency of physician judgments of capacity to consent in mild Alzheimer’s disease. J Am Geriatr Soc 1997; 45:453–457

81. Marson DC, Earnst KS, Jamil F, Bartolucci A, Harrell LE: Consistency of physicians’ legal standard and personal judgments of competency in patients with Alzheimer’s disease. J Am Geriatr Soc 2000; 48:911–918

82. Marson DC, Hawkins L, McInturff B, Harrell LE: Cognitive models that predict physician judgments of capacity to consent in mild Alzheimer’s disease. J Am Geriatr Soc 1997; 45:458–464

83. Marson D, Cody HA, Ingram KK, Harrell LE: Neuropsychological predictors of competency in Alzheimer’s disease using a rational reasons legal standard. Arch Neurol 1995; 52:955–959

84. Marson DC, Chatterjee A, Ingram KK, Harrell LE: Toward a neurological model of competency: cognitive predictors of capacity to consent in Alzheimer’s disease using three different legal standards. Neurology 1996; 46:666–672

85. Earnst KS, Marson D, Harrell LE: Cognitive models of physicians’ legal standard and personal judgments of competency in patients with Alzheimer’s disease. J Am Geriatr Soc 2000; 48:919–927

86. Dymek MP, Atchison P, Harrell L, Marson DC: Competency to consent to medical treatment in cognitively impaired patients with Parkinson’s disease. Neurology 2001; 56:17–24

87. Roth M, Tym E, Mountjoy CQ, Huppert FA, Hendrie H, Verma S, Goddard R: CAMDEX: a standardised instrument for the diagnosis of mental disorder in the elderly with special reference to the early detection of dementia. Br J Psychiatry 1986; 149:698–709

88. Stanley B, Stanley M, Guido J, Garvin L: The functional competency of elderly at risk. Gerontologist 1988; 28:53–58

89. Bean G, Nishisato S, Rector NA, Glancy G: The assessment of competence to make a treatment decision: an empirical approach. Can J Psychiatry 1996; 41:85–92

90. Folstein MF, Folstein SE, McHugh PR: “Mini-Mental State”: a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 1975; 12:189–198

91. Kim SY, Caine ED: Utility and limits of the Mini Mental State Examination in evaluating consent capacity in Alzheimer’s disease. Psychiatr Serv 2002; 53:1322–1324

92. Office for Protection From Research Risks, Division of Human Subject Protections: Evaluation of Human Subject Protections in Schizophrenia Research Conducted by the University of California Los Angeles. Los Angeles, University of California, 1994