Measuring the Impact of Medical Research: Moving From Outputs to Outcomes

Abstract

Billions of dollars are spent every year to support medical research, with a substantial percentage coming from charitable foundations. To justify these expenditures, some measure of the return on investment would be useful, particularly one aligned with the intended ultimate outcome of this scientific effort: the amelioration of disease. The current mode of reporting on the success of medical research is output based, with an emphasis on measurable productivity. This approach falls short in many respects and may be contributing to the well-described efficacy-effectiveness gap in clinical care. The author argues for an outcomes-based approach and describes the steps involved, using an adaptation of the logic model. A shift in focus to the outcomes of our work would provide our funders with clearer mission-central return-on-investment feedback, would make explicit the benefits of science to an increasingly skeptical public, and would serve as a compass to guide the scientific community in playing a more prominent role in reducing the efficacy-effectiveness gap. While acknowledging the enormous complexity involved in implementing this approach on a large scale, the author hopes that this essay will encourage some initial steps toward this aim and stimulate further discussion of this concept.

Every year, more than $70 billion is spent on medical research in the United States alone, with a significant percentage of this funding coming from government agencies and charitable foundations (1). While these grants have contributed to impressive advances throughout medicine, quantifying the specific impact of this funding on the dual aims of disease amelioration and prevention represents an open challenge. How can we in the medical research community best report to taxpayers and philanthropists on the societal value produced by the monies entrusted to us? Or, to put it bluntly, what exactly is the return on investment for these research funds?

One approach to this question involves standard economic analyses (2). Indeed, by converting the impact of medical research on life expectancy and morbidity into dollars and cents, one can make a strong argument for the financial value of an investment in research (3, 4). Some estimate that the economic benefit of medical research has been “exceptional,” with a return to society of $70 trillion since 1970 (5). Economic analyses like these, when combined with assessments of the importance of scientific inquiry to society (6–8), have been critical in justifying the expenditure of federal funds, particularly during tight fiscal periods. Yet these financial outcome measures seem removed from the core mission of academic medical research, which is by its nature a nonprofit enterprise. The potential financial payback to society does not seem to be a primary motivator for most medical scientists (9). Nor is it the type of outcome sought by the philanthropic agencies that increasingly fund our work (10, 11). In this sense, medical research entities are akin to not-for-profit “human services” agencies, where making dollars from dollars is not the primary goal.

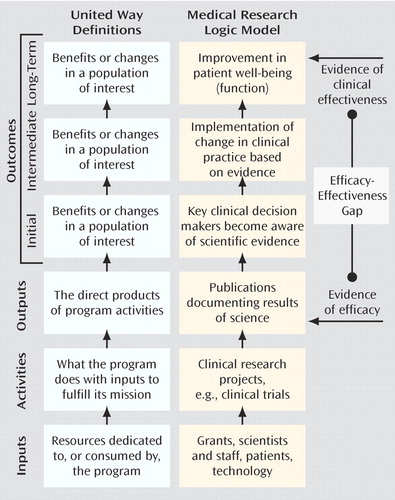

A second approach to return-on-investment reporting is provided by United Way in a helpful guide for nonprofit agencies (12). Its heuristic, which is based on a larger literature on performance-based management and program evaluation (13), begins by recognizing that all organizations use various types of inputs (personnel, funding, equipment, etc.) to carry out organizational activities (Figure 1). Most organizations then report on their outputs, often in the form of a simple numerical tabulation of their activities. By measuring instead the impact of these activities on the end user, that is, how these activities contributed to the organization’s goals, one can better gauge the organization’s success. This impact assessment is termed the measurement of outcomes.

Figure 1 note: Adapted from United Way (12). Used by permission, United Way of America.

In this essay I argue that the current mode of reporting on the success of medical research is output based, whereas to optimally use the funds provided to us, an outcomes-based approach is needed. In addition to providing a clearer set of return-on-investment metrics to those who support our work, focusing on outcomes will force us to assess our current success (or lack thereof) in achieving our aims of cure or prevention of illness. While the general concept of outcomes assessment is applicable to all levels of research, from bench to bedside, examining the impact of basic medical research is a substantially more difficult task. In an effort to reduce this complexity, the focus of this article is on the area of medical research most proximal to clinicians: large-scale efficacy studies of therapeutic interventions. In addition, the principles presented here, though applicable to all fields of medicine, are of particular value to psychiatry, as funding for research in our field has historically lagged behind that provided for other disease states (14, 15). Framing the benefits of our work in terms of outcomes would provide a compelling argument for an expansion of resources for mental health research, helping to reverse this funding disparity. Thus, while I use the broad term “medical research” throughout, I provide examples specific to our work in psychiatry wherever possible.

Current State of the Art: The Output-Based Model

As we consider the application of the United Way model to medical research, it becomes apparent that the current mode of reporting on our activities is output-based (Figure 1). The annual report to funding sources on research progress is largely a tallying of the quantity of our activities and our outputs. Typically it includes the number of research subjects enrolled (and their demographic profile), the number of projects completed, the number of abstracts presented at scientific meetings, and the number of manuscripts published in peer-reviewed journals. In some cases we might also document the additional “inputs” acquired with these funds, including the payment of research assistant salaries or the purchase of additional instrumentation. By measuring these inputs acquired and outputs achieved, the granting agency can presumably assess how the money is being used and how vigorously the scientist is pursuing his or her research objectives.

A similar model is used for internal evaluations of a scientist’s success in determining readiness for academic promotion. While attempts to integrate other criteria (e.g., devotion to clinical care or teaching) have received increasing attention (16, 17), academic productivity remains the prime determinant of promotion (16, 18–21). Given the inclination to rely on objective, measurable factors, the number of dollars in grant funding (inputs) and the number of papers published (outputs) are most often at the center of this promotion evaluation.

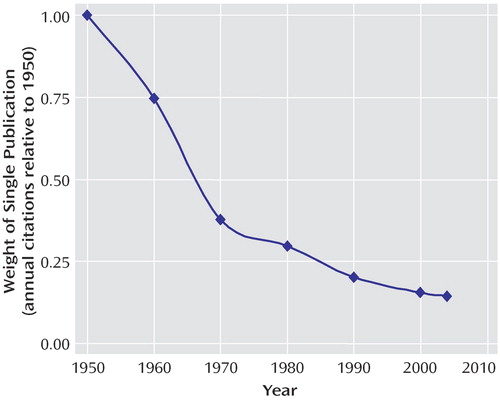

This output-based reward system has likely contributed to one well-documented phenomenon: an explosion in the number of medical manuscripts produced over the past several decades (22, 23). The expansion of science has been exponential, with a growth curve similar to “rabbits breeding among themselves” (24). Since 1950, for example, there have been approximately 15.3 million MEDLINE citations, with the annual number increasing every year. In an interesting form of academic inflation, if one paper published in 1950 were equal to a dollar, a single paper published today would be worth just over 14 cents (Figure 2). While the sheer mass of this medical research output is a testament to the scientific efforts made over the past half century, it is also potentially overwhelming, even when broken down into today’s subspecialty niches. Busy clinicians, administrators, policy makers, and even full-time scientists simply do not have the capacity to keep up with the current literature (25). Perhaps this helps explain why as many as 46% of medical science manuscripts go completely uncited in the 5-year period after publication (26, 27).
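The “academic inflation” comparison is a simple ratio of annual publication counts: if a 1950 paper is worth one dollar, a paper from a later year is worth the 1950 count divided by that year’s count. A minimal Python sketch follows. The function name is hypothetical, and because the text reports only the resulting value (just over 14 cents), the counts in the usage line are illustrative placeholders, not MEDLINE data.

```python
def paper_value_in_1950_dollars(count_1950: int, count_later: int) -> float:
    """Value of one later paper if one 1950 paper is worth $1.

    Uses the ratio of annual MEDLINE citation counts for the two years.
    Real counts would have to come from a MEDLINE query; none are built in.
    """
    if count_1950 <= 0 or count_later <= 0:
        raise ValueError("annual counts must be positive")
    return count_1950 / count_later


# Hypothetical counts for illustration only: a 7-fold rise in annual output.
value = paper_value_in_1950_dollars(100_000, 700_000)
print(f"${value:.2f}")  # prints "$0.14"
```

Read in reverse, a value of just over $0.14 implies that roughly seven times as many papers are indexed per year now as in 1950.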

Why Measure Outcomes?

It is certainly easier to measure outputs than outcomes: data on dollars of funding and number of papers published are solid, quantifiable, and readily obtained, whereas outcome measures are often “fuzzy” and difficult to assess. As we will see, measuring the downstream impact of our work represents an extraordinary challenge, in large part because of our limited understanding of the typical paths from science to clinical practice. To justify this added difficulty, an outcomes-based approach must offer substantial value.

Measuring outcomes offers three strong advantages in assessing the impact of our medical research efforts. First, measuring outcomes provides a clear and meaningful message regarding the return on investment to the major funders of our work. Framing the benefits of science not in terms of papers published but rather in terms of its impact on people gives the granting agency a greater, and more immediate, sense of contribution. An outcomes-based report, especially one that estimates improvements in overall well-being or functioning, also supplies these agencies with a common metric for comparison across disease states, allowing for informed decisions on allocation of resources.

Second, these outcome measures serve as a compass to keep our research efforts, individually and collectively, on track. Focusing on outcomes requires an honest assessment of our progress toward our long-term goals. If our aim is to develop a cure for Alzheimer’s disease, how close are we to this end? Are we any closer today than we were 10 years ago? How do we know for sure? Are we closer to developing cures for some diseases than for others? Why? This approach also forces us to reexamine our assumptions about the paths to future success. The degree to which the publication of scientific results in medical journals actually changes clinical practice is largely unknown. Perhaps there are additional avenues, besides peer-reviewed journal publication, that could augment the impact of science on clinical care. This possibility becomes apparent only when we treat the communication of our results as an initial outcome step rather than an end point.

Finally, the description of research progress in outcomes-based language makes explicit the societal good embedded within medical research, an important step in improving the attitude of the general public toward medical science. There is currently a sense of doubt about the safety of the treatments we have to offer (28), an open skepticism toward health proclamations (“yesterday X was good, today X is bad” [29]), and, more ominously, a rising level of distrust toward medical research (30, 31). These unfavorable attitudes, though particularly prominent in some communities (32, 33), are increasingly present worldwide (34). In a recent report, 79% of African Americans and 52% of white Americans indicated a belief that they might be used as “guinea pigs” in medical research without their consent (35). A high percentage of each group (46% and 35%, respectively) also felt that they may sometimes be exposed to unnecessary risk by their doctor for the sake of science. The response to this crisis in confidence has largely come in the form of increased oversight and monitoring of our work by outside agencies. Engaging and informing the general public about the benefits of our work represents a proactive internal response to this issue.

Moving to an Outcomes-Based Approach

United Way’s approach to outcomes measurement involves the development of a “logic model,” a series of if-then statements describing the path to the desired outcome. The logic model can help us identify some near-term outcomes that can serve as leading indicators of long-term success. Given the estimated delay of 17 years between initial reports of efficacy and the successful implementation of a new therapeutic agent in clinical practice (36, 37), it may be some time before the true impact of this research is actually known. Having some early measures of our impact is therefore critical.

While we might gain consensus on an appropriate long-term outcome for our work (e.g., improved mental and physical functioning, improved quality of life), determining reasonable near-term benchmarks is a more difficult proposition. Surprisingly, despite the current enthusiasm for translational research, the steps involved in the clinical implementation of a scientifically validated therapeutic intervention are not well worked out. The path described in this essay represents an initial attempt at describing this complex process, informed by the work of a few groups engaged in this type of program evaluation research (11, 37–39). It is important to emphasize that this model represents a rough estimate and that, through an iterative process, it should come to better reflect real-world complexity. Also note that this approach does not exclude the use of qualitative methods, including the case study approach, to assess the impact of our work. In fact, these types of detailed analyses, with a focus on examining the dispersion and implementation of science at the single-project (or single-grant) level, have an important complementary role in further refining and improving our understanding of the gap between science and clinical care (11, 39–41).

Figure 1 outlines the core components of the process: research is published in journals (output); journals are read by physicians and other key clinical decision makers (initial outcome); these clinicians implement a change in practice (intermediate outcome); and a practice change leads to improvement in patients’ lives (long-term outcome). When viewed in this manner, it becomes clear that the relationship between outputs and outcomes is analogous to the relationship between the demonstration of research efficacy and the clinical effectiveness of a particular intervention. Substantial evidence suggests that this gap between efficacy and effectiveness is quite large, as many efficacious treatments are not implemented properly (and often not implemented at all) in the real-world setting of clinical medicine (42–46). From the standpoint of the clinical scientist following an output-based approach, the responsibility for this gap lies squarely on the shoulders of the treating physician. Once the data have been published, any inconsistencies between this evidence and clinical practice represent either a lack of awareness of the evidence or a lack of the clinical acumen necessary to appropriately apply the evidence. The output model essentially suggests that “if you build it (publish it), they will come,” perhaps with the additional proviso, “as long as it is in a decent journal.”
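The output and outcome stages listed above can be written as the chain of if-then statements that makes up a logic model. The Python sketch below is purely illustrative: `Stage`, `LOGIC_MODEL`, and `if_then_chain` are hypothetical names, and the stage descriptions paraphrase the text rather than any formal United Way schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    level: str        # position in the logic model, e.g. "output"
    description: str  # what must happen at this stage


# Stages as listed in the text: one output followed by three outcome levels.
LOGIC_MODEL = [
    Stage("output", "research is published in journals"),
    Stage("initial outcome", "physicians and other key clinical decision makers read it"),
    Stage("intermediate outcome", "these clinicians implement a change in practice"),
    Stage("long-term outcome", "the practice change improves patients' lives"),
]


def if_then_chain(stages: list[Stage]) -> list[str]:
    """Pair each stage with the next one to form the model's if-then statements."""
    return [
        f"IF {current.description}, THEN {following.description} ({following.level})"
        for current, following in zip(stages, stages[1:])
    ]


for statement in if_then_chain(LOGIC_MODEL):
    print(statement)
```

Writing the chain out this way makes explicit that an output-based report stops at the first link, whereas each later link is a separate outcome that can, in principle, be measured.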

The outcome-based model, on the other hand, suggests that the successful implementation of a new intervention is a shared responsibility and, indeed, should serve as the basis by which we judge the quality of our research efforts. Any failures in the system are partially ours, and we should identify ways that we as scientists might help to fix them. This collaborative responsibility becomes apparent only once we think beyond the outputs and begin to assess our success via the near- and long-term outcomes described below.

Initial Outcome: Key Clinical Decision Makers Become Aware of the Research Evidence

How can we best get research findings to those responsible for implementing clinical change? If considered at all, this step is likely viewed in terms of the journal’s “impact factor,” the number of citations received in a given year by articles the journal published in the preceding 2 years, divided by the number of citable articles it published in that period (47). The impact factor has been shown to be highly correlated with both scientific selectivity (manuscript acceptance rate) (48) and perceived journal quality among scientists (49). Because it is seen to be reflective of academic excellence, the impact factor of the journal in which one publishes is increasingly being used as an outcome measure, for both external reporting and internal advancement (50–52).
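In its standard two-year form, the impact factor for year y divides the citations received during year y by items the journal published in years y-1 and y-2 by the number of citable items it published in those two years. A minimal Python sketch, assuming the two counts are already available; the function name and the journal figures in the usage line are hypothetical.

```python
def impact_factor(citations_in_year: int, citable_items_prior_two_years: int) -> float:
    """Two-year journal impact factor for some year y.

    citations_in_year: citations received during year y by items the journal
        published in years y-1 and y-2.
    citable_items_prior_two_years: number of citable items (e.g., articles and
        reviews) the journal published in years y-1 and y-2.
    """
    if citable_items_prior_two_years <= 0:
        raise ValueError("the journal must have published citable items")
    return citations_in_year / citable_items_prior_two_years


# Hypothetical journal: 600 citations in year y to 200 items from y-1 and y-2.
print(impact_factor(600, 200))  # prints 3.0
```

Every count in the numerator is a citation by another publication, which is why the measure tracks spread among publishing scientists rather than among practicing clinicians.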

Impact factor alone, however, is unlikely to be predictive of subsequent clinical implementation. First, a journal’s impact factor measures the lateral spread of its output to other scientists, not the vertical spread of that information to the clinical domain. Because most clinicians do not publish scientific papers, their reading habits are not necessarily captured by a citation-based measure (53). Studies of the reading habits of psychiatrists (54) and surgeons (55) show that the most widely read journals in these fields do not all have high impact factors. The correlation between the perceived quality of a journal and its impact factor is significantly higher among research physicians than among physicians in clinical practice (49). Similarly, the relationship between the perceived importance of a journal and its impact factor is tighter in “scientific” subfields than in clinical domains such as nursing (56). In fact, one study found an inverse correlation between a journal’s perceived clinical usefulness and its impact factor (57). Finally, Grant and colleagues (37) found that nearly 75% of publications included in comprehensive clinical guidelines came from clinically focused journals, a disproportionate number when compared with the citation pattern of biomedical articles in general.

Perhaps more important, the information used by clinicians to implement change in practice may not come from a journal publication at all (38). The medical journal is only one node in a complex network of information sources available to clinicians in practice, which now includes professional meetings, continuing medical education (CME) seminars, discussions with colleagues, visits from pharmaceutical representatives, textbooks, newspapers, Web sites, and even feedback from patients (55, 58, 59). Surveys indicate that clinicians have only a few hours per week to read the literature and that they tend to read only a handful of the 15,000 biomedical journals currently in publication (53–55, 59–64). It is therefore not surprising that clinicians may turn to secondary and tertiary channels of information to learn about recent scientific evidence (59, 65). The journal impact factor alone is unlikely to capture the degree to which these multiple channels transmit scientific information to clinicians and decision makers.

Measuring the penetration of research into the clinical domain is thus a complex but important first step in assessing the impact of science on patient health. As we begin to look beyond the impact factor for better measures of information awareness, three broad approaches hold the most promise:

We will continue to rely on scientometrics, the discipline concerned with measuring the communication of scientific information, to provide us with tools to track our outcomes. This approach is most appealing to the individual scientist, as scientometric values could be made available online, allowing for easy, low-cost outcomes reporting. While there are no perfect measures at present, one novel option uses a combination of the journal impact factor and a number of other measures, including the citation rates of our research in nonjournal media, such as newspapers, patents, and clinical guidelines (56, 58, 66).

Ideally, we would like to know the actual number of people reading (and understanding) our work. With paper-only journals, actual readership is difficult to assess, especially on an article-by-article basis. As more and more clinicians access journal articles via the Internet, our ability to track readership will increase. Although this information is currently held by the publishers, the increasing use of open-access scientific journals, as well as new regulations requiring public access to research funded by the National Institutes of Health, may soon provide the individual scientist with ready access to these Web tracking data.

Surveys could be used to gauge the penetration of information. These could range from very small scale (tracking poster readership at a conference) to very large scale (tracking nationwide awareness of a specific set of research findings or reports). This type of assessment might be particularly relevant as a follow-up to the publication of large multicenter trials, treatment recommendations, and algorithms.
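For a survey of awareness, the natural summary is the proportion of respondents who know of the finding, reported with a confidence interval so that small surveys are not over-read. The sketch below uses the Wilson score interval, one standard choice for a binomial proportion. The function name and the example counts are hypothetical, and the calculation assumes a simple random sample of the target clinicians.

```python
import math


def awareness_estimate(n_aware: int, n_surveyed: int, z: float = 1.96) -> tuple[float, float, float]:
    """Proportion of surveyed clinicians aware of a finding, with a Wilson score interval.

    z = 1.96 gives an approximate 95% interval. Assumes a simple random sample;
    clustered or weighted survey designs would need a different variance estimate.
    Returns (proportion, lower_bound, upper_bound).
    """
    if n_surveyed <= 0 or not 0 <= n_aware <= n_surveyed:
        raise ValueError("need 0 <= n_aware <= n_surveyed and n_surveyed > 0")
    p = n_aware / n_surveyed
    z2 = z * z
    denom = 1 + z2 / n_surveyed
    centre = (p + z2 / (2 * n_surveyed)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n_surveyed + z2 / (4 * n_surveyed**2))
    return p, centre - half_width, centre + half_width


# Hypothetical follow-up survey after a trial report: 40 of 200 clinicians aware.
p, low, high = awareness_estimate(40, 200)
print(f"{p:.1%} aware (95% CI {low:.1%} to {high:.1%})")
```

Unlike the simpler normal-approximation interval, the Wilson interval stays within 0 to 1 even when awareness is very low, which is plausible for findings that have not yet spread.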

Intermediate Outcome: Change in Clinical Practice Is Implemented

Measuring awareness of a particular research finding is insufficient, for one simple reason: there is a tremendous difference between knowing and doing. As Wilson and colleagues noted, “The naive assumption that when research evidence is made available it is routinely accessed by practitioners, appraised, and then applied in practice is now largely discredited. While awareness of a practice guideline or a research-based recommendation is important, it is rarely, by itself, sufficient to change practice” (67). Measuring the degree of implementation (the actual change in practice stimulated by a particular scientific finding) is therefore a critical intermediate indicator of success.

The rate of implementation of our existing scientific successes is suboptimal. Despite the ubiquity of published guidelines and treatment algorithms, examples of the underutilization of efficacious treatments are abundant across many medical disciplines, including psychiatry. Fewer than half of patients diagnosed with depression (68) or panic disorder (69) in the primary care setting receive any pharmacological treatment. Similar figures have been reported for patients treated primarily by psychiatrists, including those with psychotic disorders (70). Even when patients receive medication, they often receive inadequate doses for inadequate durations. Fewer than a third of all patients with schizophrenia, for example, are treated with a recommended dose of an antipsychotic (71). The implementation rates of scientifically vetted psychosocial interventions are even more abysmal. In one study, only 17.6% of patients with a depressive or anxiety disorder received appropriate counseling (72). Among patients with schizophrenia who had regular contact with their families, family psychoeducation was provided to as few as 10% of patients and their families (71). A comparably low percentage of patients receive other valuable interventions, including vocational rehabilitation and social skills training (73).

There are several causes of this implementation gap, including many beyond the direct control of clinicians or scientists. Economic and regulatory factors can play a tremendous role in the usage rates of specific treatments or technologies (74). Even consumer demand can shape practice patterns, as evidenced by the increased rate of third-party reimbursement for complementary and alternative therapies (75). These factors notwithstanding, there are a number of ways in which medicine as a field can act to improve concordance between evidence and clinical practice. Most of the focus thus far has been on the clinical end of the process, including the use of feedback to encourage performance at or above established benchmarks and enforcement of practice guidelines through financial incentives or penalties (76). By better understanding the processes by which change is accepted or rejected by the clinical end user, we in the research community can also play a role in these programs of process improvement.

As described by Harvey Fineberg in a 1985 Institute of Medicine report, there are 10 key factors that influence the diffusion of new technology (or therapeutics) into the clinical domain (77). Half of them relate specifically to the innovation itself. Understanding these five technology-related factors can help scientists increase the implementation of their findings:

The channel of communication. Some sources of information simply have more influence on decision making than others. The degree to which physicians are influenced by information from one journal as compared with another, presumed to be related to journal impact factor, is actually largely unknown. Other forms of information delivery, including direct personal contact, may have a greater impact than academic publications. Indeed, although physicians indicate that they are swayed more by published guidelines and CME events than by advertisements, industry literature, and pharmaceutical representatives (59), there is some evidence to suggest that the latter sources are as influential as the former (78–81). The use of “opinion leaders” need not be confined to the pharmaceutical industry. In fact, a recent report highlights the role of word-of-mouth communication (or “buzz marketing”) in reducing the inappropriate use of antibiotics (82). For clinical scientists who are interested in seeing their research findings implemented in the clinical domain, these data suggest that a more active approach to information dissemination may be necessary (53).

The coherence of the findings with prevailing clinical beliefs. In one survey (59), the factor most often cited as determining the perceived scientific validity of a research article was its coherence with the physician’s own experience, rated “always” or “often” important by 88% of respondents, followed by discussion with colleagues (73%) and reliance on the peer review process (48%). Preconceived beliefs, based on either personal experience or prevailing paradigms, can therefore serve as stern gatekeepers to the entry of new ideas. The limited use of cognitive behavior therapy for the positive symptoms of schizophrenia, for example, may partially be accounted for by the belief among some psychiatrists that brain-based symptoms require biologically based treatments (83). Thus, if a finding requires thinking that is “outside the box,” preparatory information may be necessary before the evidence is accepted and implemented.

The quality and quantity of the evidence. Scientists will be reassured to know that the quality of the evidence is still an important factor in determining whether a change in practice is implemented. The publication of a well-conducted, large-scale, randomized, controlled trial in a respected journal has been shown to have an impact on subsequent prescribing practice (84, 85). This may be truer for the implementation of a new therapy than for the abandonment of an old one, where other factors, including sensationalistic media coverage, can play a role (86). Somewhat surprisingly, the data on the impact of evidence quality are sparse. Several important questions remain largely unexamined, including the relative impact of a number of smaller studies reporting similar results as compared with a single large-scale trial.

The nature of the change. If the change in practice is easy to learn, fits well within the existing clinical structure, and is relatively inexpensive, it is more likely to be instituted. If any of these conditions is not met, the intervention either will not be used at all or will be modified (simplified) by end users to fit their means (87). In the latter case, the revised intervention may or may not retain the efficacy of the original innovation. Indeed, faithful adherence to a manualized treatment model has been shown to be related to better clinical outcomes for a variety of mental health interventions, including individual psychotherapy (88, 89), assertive community treatment (90), and family-based therapeutic approaches (91). These studies suggest that interventions designed to be congruent with current clinical realities may see better translation from laboratory to clinic (92).

The impact of the change on key clinical branch points. Physicians are more likely to implement a novel therapy (or use a novel technology) if it helps at an important clinical branch point or improves a symptom that is unaided by existing treatments. There are few (if any) examples of the use of technology in this manner within current psychiatric practice. Moving forward, we should consider these areas of unmet clinical need, as they serve as important guides in the development of novel technologies. The use of deep brain stimulation in treatment-refractory patients with obsessive-compulsive disorder (93) and the potential use of neuroimaging and genetics in predicting medication response (94) are two exciting examples of this approach that hold promise in the near future.

By taking a more active stance toward the development and promotion of technology, we raise the likelihood that the technology will actually be used. Measuring the implementation rate is admittedly a challenge. At a local level, physician surveys or even electronic medical record searches may provide some information on the use of a particular treatment. More accurate information would likely require access to a database of physician prescribing practices or medication insurance claims. The impact of a specific large-scale trial or a set of clinical practice guidelines could be assessed by comparing prescribing practices before and after publication. An even better approach, one that would provide information on the relationship between awareness and implementation, would involve an assessment of educational activities and prescribing practices in a closed system, such as a large health maintenance organization (HMO). By having a subsample of physicians within the HMO access all journals and CME materials via monitored Web-based portals, one could quantify actual reading patterns and then cross-reference them with subsequent changes in prescribing or orders for diagnostic tests.
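The before-and-after comparison of prescribing practices can be sketched as the difference in the share of eligible patient visits at which the recommended treatment was given, on either side of a publication date. This is a deliberately naive Python sketch: the record format and the function name are hypothetical, and a simple pre/post difference cannot separate the effect of a publication from trends already under way, for which an interrupted time-series design or a comparison group would be needed.

```python
from datetime import date


def pre_post_treatment_rates(records, publication_date: date):
    """Share of eligible visits with the recommended treatment, before vs. after a publication.

    records: iterable of (visit_date, received_treatment) pairs, one per
        eligible patient visit, e.g. drawn from claims or prescribing data.
    Returns (rate_before, rate_after, absolute_change).
    """
    records = list(records)  # allow generators, since the data are scanned twice
    before = [treated for visit, treated in records if visit < publication_date]
    after = [treated for visit, treated in records if visit >= publication_date]
    if not before or not after:
        raise ValueError("need eligible visits on both sides of the publication date")
    rate_before = sum(before) / len(before)  # True counts as 1, False as 0
    rate_after = sum(after) / len(after)
    return rate_before, rate_after, rate_after - rate_before


# Hypothetical visits: 1 of 4 treated before the publication date, 3 of 4 after.
visits = [
    (date(2005, 1, 10), False), (date(2005, 2, 3), True),
    (date(2005, 3, 15), False), (date(2005, 4, 2), False),
    (date(2005, 8, 9), True), (date(2005, 9, 1), True),
    (date(2005, 10, 20), False), (date(2005, 11, 5), True),
]
print(pre_post_treatment_rates(visits, date(2005, 6, 1)))  # prints (0.25, 0.75, 0.5)
```

In the closed-system HMO design described above, the same comparison could be run separately for physicians whose monitored reading included the publication and for those whose reading did not.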

Long-Term Outcome: Implementation of Change Leads to Patient Benefit

Even when scientifically supported interventions are implemented in the community, their effectiveness often falls short of the efficacy demonstrated in clinical trials (42). There are a number of reasons for this phenomenon, including lower rates of medication adherence, a higher prevalence of adverse events due to drug-drug interactions, and the impact of multiple comorbid conditions (89). Thus, real-world performance may not replicate that demonstrated in a “clean” and controlled research environment, further contributing to the efficacy-effectiveness gap.

Developing clinical trials with greater real-world validity (i.e., effectiveness trials) represents another way in which the medical research community can play a greater role in narrowing this gap (95). This research strategy aims to maintain scientific rigor while incorporating all of the “confounds” associated with clinical reality. The recent paper by Roy-Byrne and colleagues (96) demonstrating the feasibility and effectiveness of a primary care-based intervention for panic disorder is a nice example of this approach. Other large-scale studies using similar methods for bipolar disorder (97) and schizophrenia (98) are currently under way. As these trials are completed, we may begin to develop measures of clinical efficacy that better reflect the eventual therapeutic effectiveness of a particular intervention.

In measuring patient benefit, we should increasingly consider the use of general measures of functioning or well-being, such as the disability-adjusted life-year (DALY) (99), the quality-adjusted life-year (QALY), and the Medical Outcomes Study 36-item Short-Form Health Survey (SF-36) (100). Although these measures may seem vague and unsatisfying when compared with disease-specific scores, they offer tremendous comparative value for reporting purposes. They serve as a common metric to allow funders to assess the value of an investment across disease states. How else could they determine whether the development of a drug providing a 12% decrease in patients’ Beck Depression Inventory scores is a better return on their investment than a psychosocial intervention yielding an 18% improvement in patients’ Yale-Brown Obsessive Compulsive Scale scores? Furthermore, given the significant impact of major psychiatric illness on overall functioning, framing our outcomes in this manner highlights the potential economic benefit of psychiatric treatment in general, providing a persuasive message not only to the funders of our research but also to third-party payers of clinical care.
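In their simplest undiscounted forms, QALYs weight each period of life by a utility between 0 (death) and 1 (full health), and DALYs add years of life lost to premature death (YLL) to years lived with disability weighted by severity (YLD). The Python sketch below implements only these basic definitions; it omits discounting and age weighting, and the function names and example numbers are hypothetical.

```python
def qalys(health_states):
    """Quality-adjusted life-years: sum of utility (0 = dead, 1 = full health) x years."""
    return sum(utility * years for utility, years in health_states)


def dalys(deaths, years_lost_per_death, cases, disability_weight, years_with_disability):
    """Disability-adjusted life-years, undiscounted: YLL + YLD.

    disability_weight runs from 0 (full health) to 1 (equivalent to death).
    """
    yll = deaths * years_lost_per_death
    yld = cases * disability_weight * years_with_disability
    return yll + yld


# Hypothetical patient followed for 2 years, untreated vs. treated.
untreated = [(0.6, 2.0)]              # utility 0.6 for the whole period
treated = [(0.6, 0.5), (0.8, 1.5)]    # utility rises after 6 months of treatment
print(round(qalys(treated) - qalys(untreated), 2))  # prints 0.3
```

The same difference in QALYs (or DALYs averted) can be computed for a depression trial and an obsessive-compulsive disorder trial alike, which is what would let a funder compare them; mapping a change in Beck Depression Inventory or Yale-Brown scores onto utility values would require additional data that the text does not supply.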

Conclusions

By measuring three outcomes (awareness, implementation, and patient benefit), we can provide a real sense of the clinical return on an investment in medical research. Admittedly, our ability to provide this information is limited at present. In many areas, interesting questions outnumber practical answers, in part because our understanding of the path from scientific innovation to clinical use is surprisingly limited. In addition, the costs of developing better measures, such as using “market research” to measure the impact of publications on awareness and prescribing practices, would likely be nontrivial. Overcoming these limitations will require creative thought and a new level of cross-disciplinary collaboration.

This should not dissuade us from taking the initial steps, however imperfect, toward examining the real outcomes of the work we are doing. The shift to this mode of reporting will have substantial benefits for our ability to track progress, raise funds, and improve the overall standing of medical research in the public eye. Furthermore, this change from an output-based to an outcomes-based perspective is a critical step in examining the role that clinical scientists can play in decreasing the well-documented efficacy-effectiveness gap. Without this shift, there will be little incentive for researchers to look beyond the outputs of their work and little understanding of the specific areas where they can help in the implementation of this work.

We are entering a new era of accountability. Institutions in many sectors, from business to education to medicine, are being called upon to quantify the quality of their work. We in medical science can also begin this type of self-evaluation, with the goal of improving not only the quality of our efforts but also our capacity to report on what we have done.

1. Rosenberg LE: Exceptional economic returns on investments in medical research. Med J Aust 2002; 177:368–371

2. Robinson JC: The end of asymmetric information. J Health Polit Policy Law 2001; 26:1045–1053

3. Passell P: Exceptional Returns: The Economic Value of America’s Investment in Medical Research. New York, Funding First, Lasker Foundation, May 2000

4. Buxton M, Hanney S, Jones T: Estimating the economic value to societies of the impact of health research: a critical review. Bull World Health Organ 2004; 82:733–739

5. Murphy K, Topel R: Diminishing returns? the costs and benefits of improving health. Perspect Biol Med 2003; 46:S108–S128

6. Comroe JH, Dripps RD: Scientific basis for the support of biomedical science. Science 1976; 192:105–111

7. Garfield E: How can we prove the value of basic research? Curr Contents 1979; 40:5–9

8. Garfield E: The economic impact of research and development. Curr Contents 1981; 51:5–15

9. Wright SM, Beasley BW: Motivating factors for academic physicians within departments of medicine. Mayo Clin Proc 2004; 79:1145–1150

10. Gruman J, Prager D: Health research philanthropy in a time of plenty: a strategic agenda. Health Aff (Millwood) 2002; 21:265–269

11. Hanney SR, Grant J, Wooding S, Buxton MJ: Proposed methods for reviewing the outcomes of health research: the impact of funding by the UK’s “Arthritis research campaign.” Health Res Policy Syst 2004; 2:4

12. United Way: Measuring Program Outcomes: A Practical Approach. Alexandria, Va, United Way of America, 1996

13. Wholey J: Evaluation: Promise and Performance. Washington, DC, Urban Institute Press, 1979

14. Pincus HA, Fine T: The “anatomy” of research funding of mental illness and addictive disorders. Arch Gen Psychiatry 1992; 49:573–579

15. Brousseau RT, Langill D, Pechura CM: Are foundations overlooking mental health? Health Aff (Millwood) 2003; 22:222–229

16. Atasoylu AA, Wright SM, Beasley BW, Cofrancesco J Jr, Macpherson DS, Partridge T, Thomas PA, Bass EB: Promotion criteria for clinician-educators. J Gen Intern Med 2003; 18:711–716

17. Levinson W, Rubenstein A: Mission critical: integrating clinician-educators into academic medical centers. N Engl J Med 1999; 341:840–844

18. Thomas PA, Diener-West M, Canto MI, Martin DR, Post WS, Streiff MB: Results of an academic promotion and career path survey of faculty at the Johns Hopkins University School of Medicine. Acad Med 2004; 79:258–264

19. Batshaw ML, Plotnick LP, Petty BG, Woolf PK, Mellits ED: Academic promotion at a medical school: experience at Johns Hopkins University School of Medicine. N Engl J Med 1988; 318:741–747

20. Gjerde C: Faculty promotion and publication rates in family medicine: 1981 versus 1989. Fam Med 1994; 26:361–365

21. Vydareny KH, Waldrop SM, Jackson VP, Manaster BJ, Nazarian GK, Reich CA, Ruzal-Shapiro CB: The road to success: factors affecting the speed of promotion of academic radiologists. Acad Radiol 1999; 6:564–569

22. Arndt KA: Information excess in medicine: overview, relevance to dermatology, and strategies for coping. Arch Dermatol 1992; 128:1249–1256

23. Price DJ: Little Science, Big Science, and Beyond. New York, Columbia University Press, 1986

24. Fernandez-Cano A, Torralbo M, Vallejo M: Reconsidering Price’s model of scientific growth: an overview. Scientometrics 2004; 61:301–321

25. Milbank Memorial Fund: Better Information, Better Outcomes: The Use of Health Technology Assessment and Clinical Effectiveness Data in Health Care Purchasing Decisions in the United Kingdom and the United States. New York, Milbank Memorial Fund, July 2000

26. Hamilton DP: Publishing by—and for?—the numbers. Science 1990; 250:1331–1332

27. Pendlebury DA: Science, citation, and funding. Science 1991; 251:1410–1411

28. Kennedy D: Clinical trials and public trust. Science 2004; 306:1649

29. Patterson RE, Satia JA, Kristal AR, Neuhouser ML, Drewnowski A: Is there a consumer backlash against the diet and health message? J Am Diet Assoc 2001; 101:37–41

30. Kelch RP: Maintaining the public trust in clinical research. N Engl J Med 2002; 346:285–287

31. Charney DS, Innis RB, Nestler EJ, Davis KL, Nemeroff CB, Weinberger DR: Increasing public trust and confidence in psychiatric research. Biol Psychiatry 1999; 46:1–2

32. Boulware LE, Cooper LA, Ratner LE, LaVeist TA, Powe NR: Race and trust in the health care system. Public Health Rep 2003; 118:358–365

33. Gamble VN: A legacy of distrust: African Americans and medical research. Am J Prev Med 1993; 9:35–38

34. Asai A, Ohnishi M, Nishigaki E, Sekimoto M, Fukuhara S, Fukui T: Focus group interviews examining attitudes toward medical research among the Japanese: a qualitative study. Bioethics 2004; 18:448–470

35. Corbie-Smith G, Thomas SB, St George DM: Distrust, race, and research. Arch Intern Med 2002; 162:2458–2463

36. Institute of Medicine: Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC, National Academy Press, March 2001

37. Grant J, Cottrell R, Cluzeau F, Fawcett G: Evaluating “payback” on biomedical research from papers cited in clinical guidelines: applied bibliometric study. BMJ 2000; 320:1107–1111

38. Buxton M, Hanney S, Packwood T, Roberts S, Youll P: Assessing benefits from Department of Health and National Health Service research and development. Public Money Management 2000; 20:29–34

39. Buxton M, Hanney S: How can payback from health services research be assessed? J Health Serv Res Policy 1996; 1:35–43

40. Molas-Gallart J, Tang P, Morrow S: Assessing the non-academic impact of grant-funded socioeconomic research: results from a pilot study. Res Eval 2000; 9:171–182

41. Lavis J, Ross S, McLeod C, Gildiner A: Measuring the impact of health research. J Health Serv Res Policy 2003; 8:165–170

42. Wells KB: Treatment research at the crossroads: the scientific interface of clinical trials and effectiveness research. Am J Psychiatry 1999; 156:5–10

43. Schoenwald SK, Hoagwood K: Effectiveness, transportability, and dissemination of interventions: what matters when? Psychiatr Serv 2001; 52:1190–1197

44. Glasgow RE, Lichtenstein E, Marcus AC: Why don’t we see more translation of health promotion research to practice? rethinking the efficacy-to-effectiveness transition. Am J Public Health 2003; 93:1261–1267

45. Grant RW, Cagliero E, Dubey AK, Gildesgame C, Chueh HC, Barry MJ, Singer DE, Nathan DM, Meigs JB: Clinical inertia in the management of type 2 diabetes metabolic risk factors. Diabet Med 2004; 21:150–155

46. Torrey EF: PORT: updated treatment recommendations. Schizophr Bull 2004; 30:617

47. Garfield E: The impact factor. Curr Contents 1994; 25:3–7

48. Lee KP, Schotland M, Bacchetti P, Bero LA: Association of journal quality indicators with methodological quality of clinical research articles. JAMA 2002; 287:2805–2808

49. Saha S: Impact factor: a valid measure of journal quality? J Med Libr Assoc 2003; 91:42–46

50. Tomlinson S: The research assessment exercise and medical research. BMJ 2000; 320:636–639

51. Figa Talamanca A: The “impact factor” in the evaluation of research. Bull Group Int Rech Sci Stomatol Odontol 2002; 44:2–9

52. Fassoulaki A, Sarantopoulos C, Papilas K, Patris K, Melemeni A: Academic anesthesiologists’ views on the importance of the impact factor of scientific journals: a North American and European survey. Can J Anaesth 2001; 48:953–957

53. Coomarasamy A, Gee H, Publicover M, Khan KS: Medical journals and effective dissemination of health research. Health Info Libr J 2001; 18:183–191

54. Jones T, Hanney S, Buxton M, Burns T: What British psychiatrists read. Br J Psychiatry 2004; 185:251–257

55. Schein M, Paladugu R, Sutija VG, Wise L: What American surgeons read: a survey of a thousand fellows of the American College of Surgeons. Curr Surg 2000; 57:252–258

56. Lewison G: Researchers’ and users’ perceptions of the relative standing of biomedical papers in different journals. Scientometrics 2002; 53:229–240

57. Lauritsen J, Moller AM: Publications in anesthesia journals: quality and clinical relevance. Anesth Analg 2004; 99:1486–1491

58. Lewison G: From biomedical research to health improvement. Scientometrics 2002; 54:179–192

59. Trelle S: Information management and reading habits of German diabetologists: a questionnaire survey. Diabetologia 2002; 45:764–774

60. Vickery CE, Cotugna N: Journal reading habits of dieticians. J Am Diet Assoc 1992; 92:1510–1512

61. Skinner K, Miller B: Journal reading habits of registered nurses. J Contin Educ Nurs 1989; 20:170–173

62. Saint S, Christakis DA, Saha S, Elmore JG, Welsh DE, Baker P, Koepsell TD: Journal reading habits of internists. J Gen Intern Med 2000; 15:881–884

63. Burke DT, DeVito MC, Schneider JC, Julien S, Judelson AL: Reading habits of physical medicine and rehabilitation resident physicians. Am J Phys Med Rehabil 2004; 83:551–559

64. Burke DT, Judelson AL, Schneider JC, DeVito MC, Latta D: Reading habits of practicing physiatrists. Am J Phys Med Rehabil 2002; 81:779–787

65. Stross JK, Harlan WR: The dissemination of new medical information. JAMA 1979; 241:2622–2624

66. Nagpaul PS, Roy S: Constructing a multi-objective measure of research performance. Scientometrics 2003; 56:383–402

67. Wilson P, Richardson R, Sowden AJ, Evans D: Getting evidence into practice, in Undertaking Systematic Reviews of Research Effectiveness: CRD’s Guidance for those Carrying Out or Commissioning Reviews. CRD Report 4, 2nd ed. Centre for Reviews and Dissemination, University of York, York, UK, 2000

68. Katon WJ, Simon G, Russo J, Von Korff M, Lin EH, Ludman E, Ciechanowski P, Bush T: Quality of depression care in a population-based sample of patients with diabetes and major depression. Med Care 2004; 42:1222–1229

69. Stein MB, Sherbourne CD, Craske MG, Means-Christensen A, Bystritsky A, Katon W, Sullivan G, Roy-Byrne PP: Quality of care for primary care patients with anxiety disorders. Am J Psychiatry 2004; 161:2230–2237

70. Wang PS, Demler O, Kessler RC: Adequacy of treatment for serious mental illness in the United States. Am J Public Health 2002; 92:92–98

71. Lehman AF, Steinwachs DM: Patterns of usual care for schizophrenia: initial results from the Schizophrenia Patient Outcomes Research Team (PORT). Schizophr Bull 1998; 24:11–20

72. Young AS, Klap R, Sherbourne CD, Wells KB: The quality of care for depressive and anxiety disorders in the United States. Arch Gen Psychiatry 2001; 58:55–61

73. West JC, Wilk JE, Olfson M, Rae DS, Marcus S, Narrow WE, Pincus HA, Regier DA: Patterns and quality of treatment for patients with schizophrenia in routine psychiatric practice. Psychiatr Serv 2005; 56:283–291

74. McNeil BJ: Hidden barriers to improvement in the quality of care. N Engl J Med 2001; 345:1612–1620

75. Steyer TE, Freed GL, Lantz PM: Medicaid reimbursement for alternative therapies. Altern Ther Health Med 2002; 8:84–88

76. Casalino L, Gillies RR, Shortell SM, Schmittdiel JA, Bodenheimer T, Robinson JC, Rundall T, Oswald N, Schauffler H, Wang MC: External incentives, information technology, and organized processes to improve health care quality for patients with chronic diseases. JAMA 2003; 289:434–441

77. Fineberg HV: Effects of clinical evaluation on the diffusion of medical technology, in Assessing Medical Technologies. Edited by Institute of Medicine. Washington, DC, National Academy Press, 1985

78. Avorn J, Chen M, Hartley R: Scientific versus commercial sources of influence on the prescribing behavior of physicians. Am J Med 1982; 73:4–8

79. Caplow T: Market attitudes: a research report from the medical field. Harv Bus Rev 1954; 30:105–112

80. Mintzes B, Barer ML, Kravitz RL, Kazanjian A, Bassett K, Lexchin J, Evans RG, Pan R, Marion SA: Influence of direct to consumer pharmaceutical advertising and patients’ requests on prescribing decisions: two site cross sectional survey. BMJ 2002; 324:278–279

81. Wazana A: Physicians and the pharmaceutical industry: is a gift ever just a gift? JAMA 2000; 283:373–380

82. Holdford DA: Using buzz marketing to promote ideas, services, and products. J Am Pharm Assoc 2004; 44:387–395

83. Jorm AF, Korten AE, Jacomb PA, Rodgers B, Pollitt P: Beliefs about the helpfulness of interventions for mental disorders: a comparison of general practitioners, psychiatrists, and clinical psychologists. Aust N Z J Psychiatry 1997; 31:844–851

84. Calvo BC, Rubinstein A: Influence of new evidence on prescription patterns. J Am Board Fam Pract 2002; 15:457–462

85. Fineberg HV: Clinical evaluation: how does it influence medical practice? Bull Cancer 1987; 74:333–346

86. Brunt ME, Murray MD, Hui SL, Kesterson J, Perkins AJ, Tierney WM: Mass media release of medical research results: an analysis of antihypertensive drug prescribing in the aftermath of the calcium channel blocker scare of March 1995. J Gen Intern Med 2003; 18:84–94

87. Berwick DM: Disseminating innovations in health care. JAMA 2003; 289:1969–1975

88. Weiss B, Weisz JR: The impact of methodological factors on child psychotherapy outcome research: a meta-analysis for researchers. J Abnorm Child Psychol 1990; 18:639–670

89. Clarke GN: Improving the transition from basic efficacy research to effectiveness studies: methodological issues and procedures. J Consult Clin Psychol 1995; 63:718–725

90. McHugo GJ, Drake RE, Teague GB, Xie H: Fidelity to assertive community treatment and client outcomes in the New Hampshire dual disorders study. Psychiatr Serv 1999; 50:818–824

91. Curtis NM, Ronan KR, Borduin CM: Multisystemic treatment: a meta-analysis of outcome studies. J Fam Psychol 2004; 18:411–419

92. Weisz JR, Donenberg GR, Han SS, Weiss B: Bridging the gap between laboratory and clinic in child and adolescent psychotherapy. J Consult Clin Psychol 1995; 63:688–701

93. Rauch SL, Greenberg BD, Cosgrove GR: Neurosurgical treatments and deep brain stimulation, in Comprehensive Textbook of Psychiatry, 8th ed. Edited by Sadock BJ, Sadock VA. Philadelphia, Lippincott Williams & Wilkins, 2005, pp 2983–2990

94. Bertolino A, Caforio G, Blasi G, De Candia M, Latorre V, Petruzzella V, Altamura M, Nappi G, Papa S, Callicott JH, Mattay VS, Bellomo A, Scarabino T, Weinberger DR, Nardini M: Interaction of COMT Val 108/158 Met genotype and olanzapine treatment on prefrontal cortical function in patients with schizophrenia. Am J Psychiatry 2004; 161:1798–1805

95. Roy-Byrne PP, Sherbourne CD, Craske MG, Stein MB, Katon W, Sullivan G, Means-Christensen A, Bystritsky A: Moving treatment from clinical trials to the real world. Psychiatr Serv 2003; 54:327–332

96. Roy-Byrne PP, Craske MG, Stein MB, Sullivan G, Bystritsky A, Katon W, Golinelli D, Sherbourne CD: A randomized effectiveness trial of cognitive-behavioral therapy and medication for primary care panic disorder. Arch Gen Psychiatry 2005; 62:290–298

97. Bauer MS, Williford WO, Dawson EE, Akiskal HS, Altshuler L, Fye C, Gelenberg A, Glick H, Kinosian B, Sajatovic M: Principles of effectiveness trials and their implementation in VA Cooperative Study #430: “Reducing the efficacy-effectiveness gap in bipolar disorder.” J Affect Disord 2001; 67:61–78

98. Swartz MS, Perkins DO, Stroup TS, McEvoy JP, Nieri JM, Haak DC: Assessing clinical and functional outcomes in the Clinical Antipsychotic Trials of Intervention Effectiveness (CATIE) schizophrenia trial. Schizophr Bull 2003; 29:33–43

99. Murray CJ, Lopez AD: Global mortality, disability, and the contribution of risk factors: global burden of disease study. Lancet 1997; 349:1436–1442

100. Ware JE Jr, Sherbourne CD: The MOS 36-item short-form health survey (SF-36), I: conceptual framework and item selection. Med Care 1992; 30:473–483